This article was co-edited by: Blake, Gao Fei

Pedro Domingos is a professor of computer science and engineering at the University of Washington and a co-founder of the International Machine Learning Society. He received a master's degree in electrical engineering and computer science from IST Lisbon and a Ph.D. in information and computer science from the University of California, Irvine. He then spent two years as an assistant professor at IST before joining the University of Washington in 1999. He is also a winner of the SIGKDD Innovation Award (the highest award in data science) and an AAAI Fellow. Lei Feng Network note: this article is based on a machine learning lecture Pedro Domingos gave at Google.

Let's start with a simple question: where does knowledge come from? Until recently there were three known sources:

1. Evolution - from your DNA

2. Experience - from your neurons

3. Culture - This knowledge comes from communicating with others, reading, studying, etc.

Almost everything in our daily lives comes from these three sources of knowledge. The fourth source, which has emerged only recently, is the computer. More and more knowledge now comes from computers, in the sense that it is discovered by computers.

The arrival of computers as a source of knowledge is as big a change as each of the first three. Evolution is what produced life on Earth, experience is what sets us apart from animals such as insects, and culture is what makes us human.

Each of these four sources is orders of magnitude faster than the one before it and can discover more knowledge. Computers are orders of magnitude faster than the previous three, and they can coexist with them.

Yann LeCun, Director of AI Research at Facebook:

"Most of the knowledge in the world in the future is going to be extracted by machines and will reside in machines."

So machine learning is a big change not only for computer scientists but also for ordinary people, who need to understand it too.

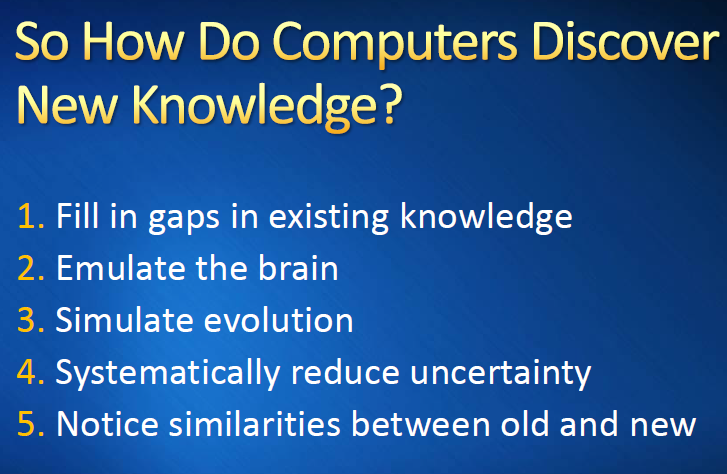

So how do computers discover new knowledge?

1. Fill gaps in existing knowledge

This works much the way scientists work: observe, form hypotheses, explain with theory, and then succeed (or fail and try new hypotheses).

2. Brain simulation

The world's greatest learning machine is the human brain, so let us reverse engineer it.

3. Simulate the evolutionary process

In some ways evolution is even greater than the human brain (after all, it created your brain, your body, and everything else living on Earth), so it is worth understanding this process clearly and running it on a computer.

4. Systematically reduce uncertainty

The knowledge you learn may not be correct: whenever you induce something from data, you cannot be completely sure of it. So use probability to quantify this uncertainty, and as you see more evidence, refine the probabilities of the different hypotheses. Bayes' theorem is the tool for doing this.

5. Pay attention to the similarities between old and new knowledge

Reason by analogy. There is evidence from psychology that humans do this all the time: when you face a new situation, you look for similar situations in your past experience and connect the two.

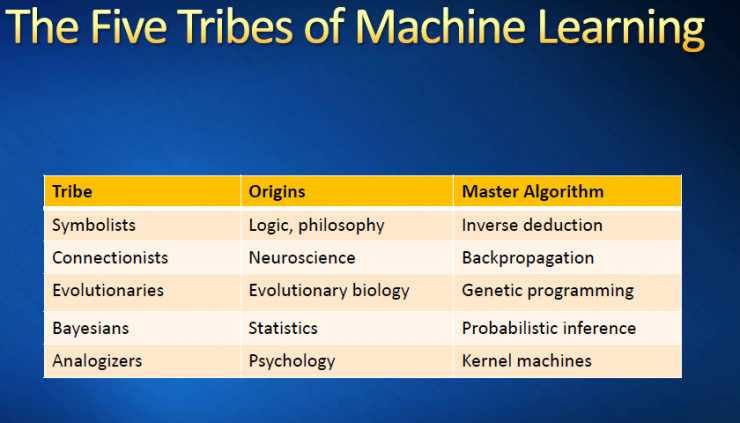

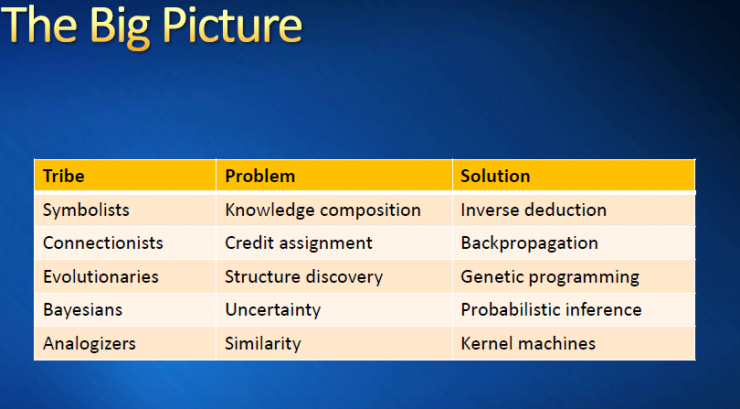

Five major schools of machine learning (and their master algorithms)

Symbolists - logic and philosophy - inverse deduction

Believe in filling gaps in existing knowledge

Connectionists - neuroscience - backpropagation

Hope to be inspired by how the brain works

Evolutionists - evolutionary biology - genetic programming

Simulate evolution with genetic algorithms

Bayesians - statistics - probabilistic inference

Analogizers - psychology - kernel machines (support vector machines)

Representatives of the symbolists:

Tom Mitchell, Steve Muggleton, Ross Quinlan

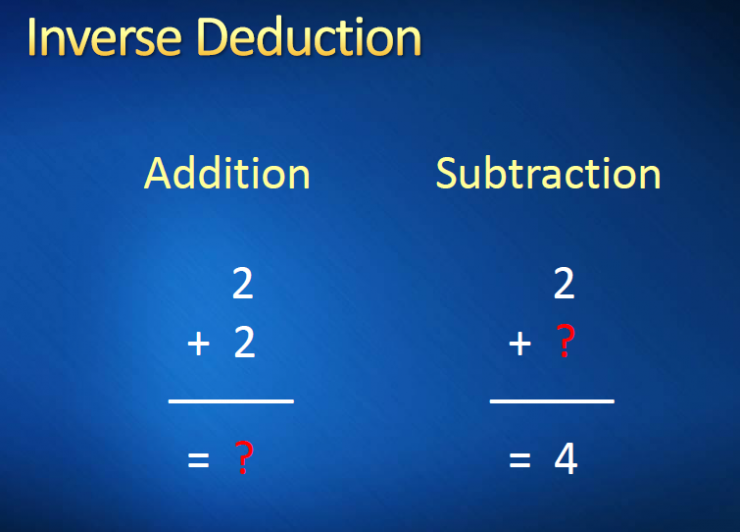

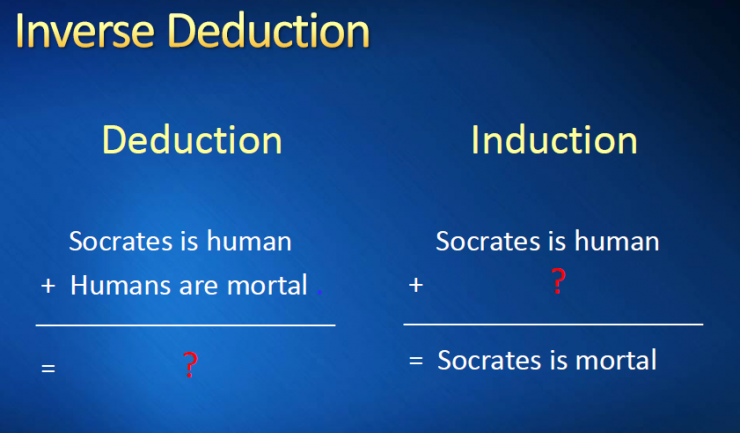

Inverse deduction

Tom Mitchell, Steve Muggleton, and Ross Quinlan see learning as inverse deduction. Deduction goes from general rules to specific facts; induction is the opposite, going from specific facts to general rules. Just as subtraction is the inverse of addition, induction can be treated as the inverse of deduction, and that is how the general rules can be worked out.

An inverse deduction example:

Deduction: Socrates is human + humans are mortal = Socrates is mortal. Inverse deduction asks the question backwards: Socrates is human + ? = Socrates is mortal, and induces the missing general rule, humans are mortal.

(In practice these statements are written in formal logic rather than English, because computers cannot yet understand natural language.)
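To make the idea concrete, here is a minimal sketch in Python, assuming facts are encoded as simple (predicate, individual) tuples and rules as (body, head) pairs meaning "body(X) implies head(X)". The functions `deduce` and `inverse_deduce` are hypothetical illustrations, not the algorithm of any real inductive logic programming system: deduction chains rules forward, while inverse deduction proposes the missing rule that would make the goal derivable.

```python
def deduce(facts, rules):
    """Forward chaining: apply each rule body(X) -> head(X) until nothing new is derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            for pred, arg in list(derived):
                if pred == body and (head, arg) not in derived:
                    derived.add((head, arg))
                    changed = True
    return derived


def inverse_deduce(facts, goal):
    """Propose rules body(X) -> head(X) that would let deduce() derive `goal`."""
    head, arg = goal
    # Every known fact about the same individual is a candidate rule body.
    # Replacing the constant (e.g. "socrates") with a variable X generalizes it.
    return [(pred, head) for pred, a in sorted(facts) if a == arg and pred != head]


facts = {("human", "socrates")}
rules = inverse_deduce(facts, ("mortal", "socrates"))
print(rules)  # [('human', 'mortal')], read as: human(X) -> mortal(X)

# The induced rule reproduces the original conclusion and generalizes to new individuals.
print(("mortal", "socrates") in deduce(facts, rules))              # True
print(("mortal", "plato") in deduce({("human", "plato")}, rules))  # True
```

Real inverse deduction systems search a much richer space of candidate clauses and must score competing rules against the data; this toy version simply proposes every candidate.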

Spot the biologist in the picture

The answer is the machine. The machine in the picture is a complete, automated biologist that learns molecular biology (DNA, proteins, RNA) by itself. It uses inverse deduction to form hypotheses and designs experiments to test whether those hypotheses hold, without human help. It then looks at the results and refines its hypotheses (or proposes new ones).

Connectionist representatives include:

Geoff Hinton, Yann LeCun, Yoshua Bengio

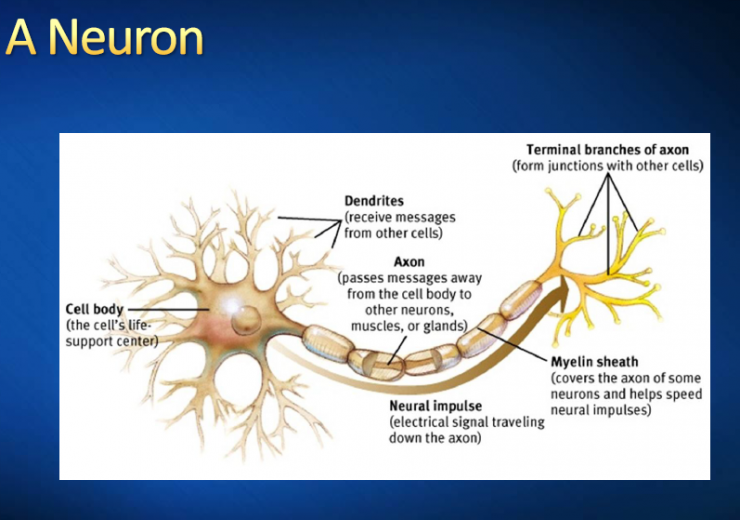

Single neuron

Neurons are very interesting cells that look like trees. A neuron consists of a cell body and processes that extend from it, which are divided into dendrites and an axon. Each neuron can have one or more dendrites, which receive stimulation and carry the excitation into the cell body. Each neuron has only one axon, which carries excitation away from the cell body to another neuron or to other tissue, such as muscles or glands. Long axons are wrapped in a sheath, forming nerve fibers, and the tiny branches at their ends are called nerve endings. Neurons are interconnected into a huge neural network, and almost all of the knowledge that humans learn is stored in the synapses, the connections between neurons. Learning is basically the process by which, when one neuron helps another to fire, the connection between them is strengthened.

Artificial neuron model

An artificial neuron works by taking a weighted combination of its inputs.

For example, if each input is a pixel, each pixel value is multiplied by a weight and the results are added up; if the sum exceeds a threshold, the neuron outputs 1, otherwise it outputs 0.

So if the input is an image of a cat and the weighted sum exceeds the threshold, the neuron recognizes it: this is a cat.
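The threshold neuron described above can be sketched in a few lines of Python. The weights, threshold, and three-pixel input are hypothetical values chosen only for illustration.

```python
def neuron(inputs, weights, threshold):
    """Threshold unit: output 1 if the weighted sum of the inputs exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Hypothetical 3-pixel "image" and hand-picked weights, for illustration only.
weights = [0.5, -0.2, 0.7]
print(neuron([1, 0, 1], weights, threshold=1.0))  # 1  (0.5 + 0.7 = 1.2 > 1.0)
print(neuron([0, 1, 0], weights, threshold=1.0))  # 0  (-0.2 <= 1.0)
```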

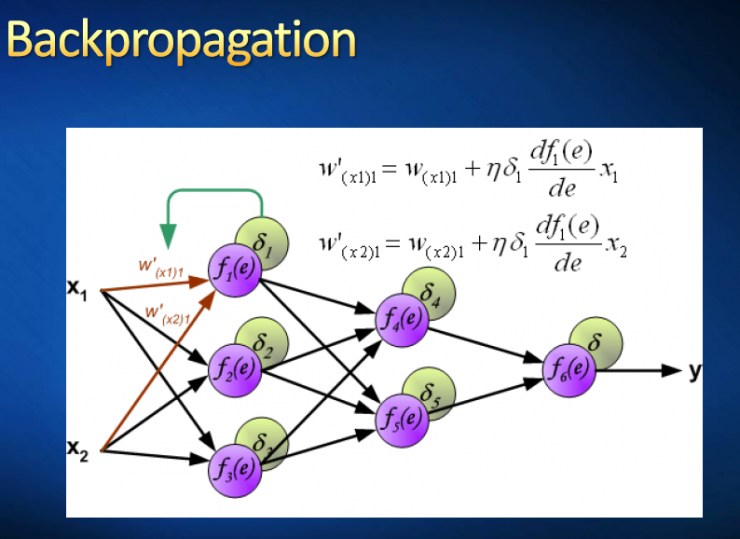

Backpropagation

Question one: How do you train these neural networks?

A neural network has a large number of neurons, and their outputs are computed one layer at a time.

Question two: What if the output is wrong? How do we adjust a large, tangled network to get the right answer?

Suppose something goes wrong: the output neuron should have fired, but it did not. The neuron responsible could be anywhere in the network, and it is very hard to find out which one it is. This is the problem that backpropagation solves. The people who designed neural networks in the 1960s did not come up with backpropagation; it was finally introduced by David Rumelhart and colleagues in the 1980s.

The basic idea of backpropagation is very intuitive. For example, suppose the desired output is 1 but the actual output is 0.2, so the output needs to go up.

Question three: How should the weights be adjusted to make it go up?

The error at the output is fed back to the neurons in the layer before it, and from them to the layer before that, and each weight is nudged in the direction that moves the output closer to the correct value. Repeating this layer by layer is the backpropagation algorithm, which is also at the core of deep learning.
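A minimal sketch of backpropagation in Python, assuming a tiny network with two inputs, two sigmoid hidden neurons, one sigmoid output neuron, squared error, and plain gradient descent. All weights, the learning rate, and the number of steps are hypothetical choices. The sketch shows the output error being sent back through the output weights to assign blame to each hidden neuron, so that repeated small weight changes push a low output toward the target of 1.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Compute hidden activations and the output, one layer at a time."""
    h = [sigmoid(sum(wi * xi for wi, xi in zip(w, x))) for w in w_hidden]
    y = sigmoid(sum(wo * hj for wo, hj in zip(w_out, h)))
    return h, y


def backprop_step(x, target, w_hidden, w_out, lr=0.5):
    """One gradient-descent step on squared error, updating the weights in place."""
    h, y = forward(x, w_hidden, w_out)
    # Error signal at the output neuron: derivative of 0.5*(y - target)**2 through the sigmoid.
    delta_out = (y - target) * y * (1 - y)
    # Send the error back: each hidden neuron is blamed in proportion to its outgoing weight.
    delta_hidden = [delta_out * w_out[j] * h[j] * (1 - h[j]) for j in range(len(h))]
    for j in range(len(w_out)):
        w_out[j] -= lr * delta_out * h[j]
    for j, w in enumerate(w_hidden):
        for i in range(len(w)):
            w[i] -= lr * delta_hidden[j] * x[i]


# Hypothetical starting weights chosen so the initial output is low (about 0.25).
x, target = [1.0, 0.5], 1.0
w_hidden = [[0.5, -0.5], [-0.3, 0.8]]
w_out = [-1.0, -1.0]

before = forward(x, w_hidden, w_out)[1]
for _ in range(1000):
    backprop_step(x, target, w_hidden, w_out)
after = forward(x, w_hidden, w_out)[1]
print(f"output before: {before:.3f}, after: {after:.3f}")
```

Real networks also have bias terms, many layers, and many training examples; this toy trains on a single example only to show the direction of the updates.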

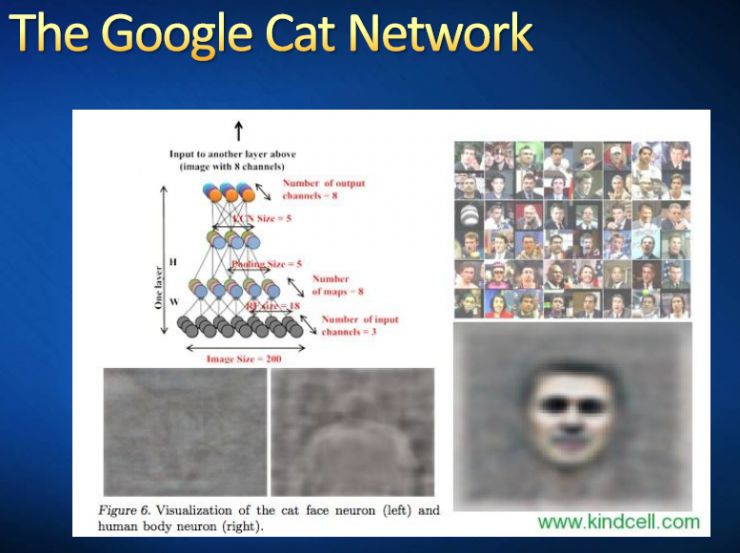

Deep learning has recently been applied in many fields, such as stock market prediction, search, advertising, video recognition, and semantic recognition. But for the general public, the best-known example is probably Google's neural network that learned to recognize cats, which at the time was the largest neural network ever built (with on the order of a billion parameters).

Evolutionist representatives: John Holland, John Koza, Hod Lipson

Evolutionists argue that backpropagation only adjusts the weights of a model whose structure is already given; it does not explain where the brain itself came from. Their approach is to figure out how the whole process of evolution works and simulate the same process on the computer.

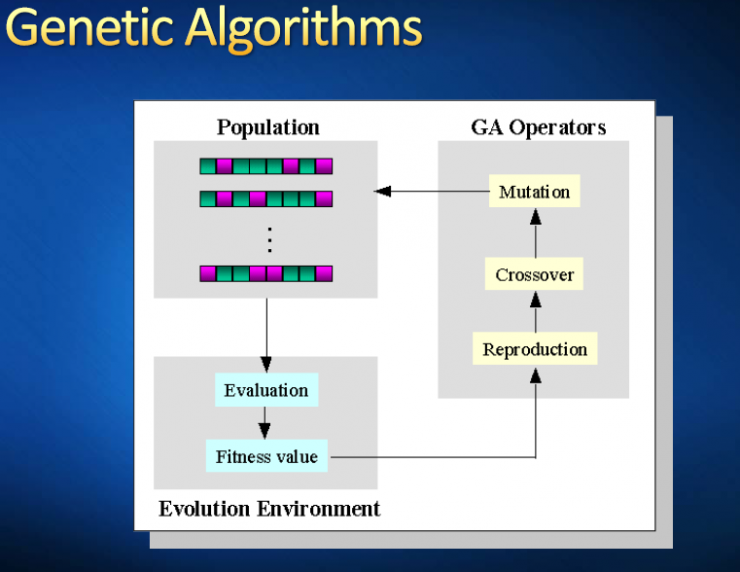

How does a genetic algorithm work?

A genetic algorithm is a computational model that simulates natural selection and the genetic mechanisms of Darwinian evolution; it searches for optimal solutions by simulating the process of natural evolution. A genetic algorithm starts with a population that represents a set of potential solutions to a problem. The population consists of a number of individuals, each encoded as genes, and each individual is in effect a chromosome. A chromosome is the main carrier of genetic material, a collection of many genes, and its internal representation (the genotype) is the combination of genes that determines the individual's external traits; for example, black hair is determined by a particular combination of genes on a chromosome. The mapping from phenotype to genotype, that is, the encoding, therefore has to be done at the very start. People differ from one another because of their genes, but unlike in humans, the only building blocks available in a computer are bits (0 and 1).

A genetic algorithm is a randomized search method modeled on the evolutionary laws of the living world (survival of the fittest and genetic inheritance). It was first proposed by Professor John Holland of the United States in 1975. Its main feature is that it operates directly on structured objects and does not require the objective function to be differentiable or continuous. It has inherent implicit parallelism and good global search ability. As a probabilistic optimization method, it can automatically explore and guide the search space and adaptively adjust its search direction without needing fixed rules. Because of these properties, genetic algorithms have been widely used in combinatorial optimization, machine learning, signal processing, adaptive control, and artificial life, and they are a key technology in modern intelligent computing.

Genetic operations simulate the way biological genes are inherited. In a genetic algorithm, once the initial population has been encoded, the genetic operators act on the individuals in the population according to their fitness to the environment (fitness evaluation), bringing about evolution by survival of the fittest.

Genetic coding

Because faithfully encoding a real genetic code is very complicated, the encoding is usually simplified. After the initial population is generated, evolution proceeds generation by generation according to the principle of survival of the fittest, producing better and better approximate solutions. In each generation, individuals are selected according to their fitness in the problem domain, and genetic operators borrowed from natural genetics (crossover and mutation) are applied to them to produce a new population representing a new set of solutions. As in natural evolution, each later generation is better adapted to the environment than the one before, and the best individual in the final population, once decoded, serves as an approximately optimal solution to the problem.
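The generational loop just described (selection by fitness, crossover, mutation, then decoding the best individual) can be sketched in Python as follows. Everything here is a hypothetical toy: individuals are bit strings, fitness is simply the number of 1 bits (the classic "OneMax" problem), selection uses small tournaments, and the best individual is copied unchanged into the next generation (elitism) so the best fitness never decreases. Holland's original formulation and real applications differ in many details.

```python
import random


def fitness(bits):
    # Hypothetical toy objective ("OneMax"): the number of 1 bits.
    return sum(bits)


def select(pop, k=3):
    # Tournament selection: the fittest of k random individuals reproduces.
    return max(random.sample(pop, k), key=fitness)


def crossover(a, b):
    # One-point crossover: prefix from one parent, suffix from the other.
    cut = random.randrange(1, len(a))
    return a[:cut] + b[cut:]


def mutate(bits, rate):
    # Flip each bit independently with probability `rate`.
    return [1 - b if random.random() < rate else b for b in bits]


def genetic_algorithm(n_bits=20, pop_size=30, generations=60, rate=0.02, seed=0):
    random.seed(seed)
    # Initial population: random bit strings (the encoded candidate solutions).
    pop = [[random.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        best = max(pop, key=fitness)
        # Elitism: the current best survives unchanged; the rest are offspring.
        pop = [best] + [mutate(crossover(select(pop), select(pop)), rate)
                        for _ in range(pop_size - 1)]
    # "Decode" the answer: in this toy the bit string itself is the solution.
    return max(pop, key=fitness)


best = genetic_algorithm()
print(best, fitness(best))
```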

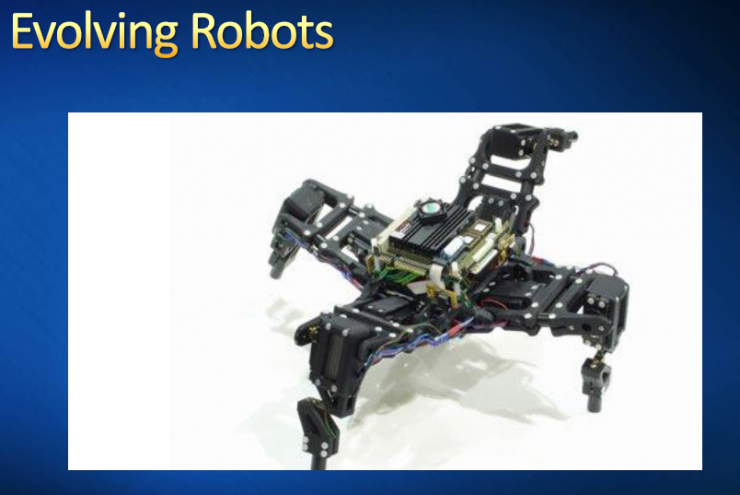

Genetic algorithm researchers are no longer content with simulation on a computer; they have taken their technique into the real world of robotics. They start with simulated robot designs, and once a design has evolved well enough, the whole robot is 3D-printed, and the printed robot really can crawl and move (Hod Lipson's lab). These robots are still not very good, but they have improved quite quickly since the work began.

Bayesian representatives: David Heckerman, Judea Pearl, Michael Jordan

Bayesian learning has long been a niche area, and Judea Pearl is a Turing Award winner.

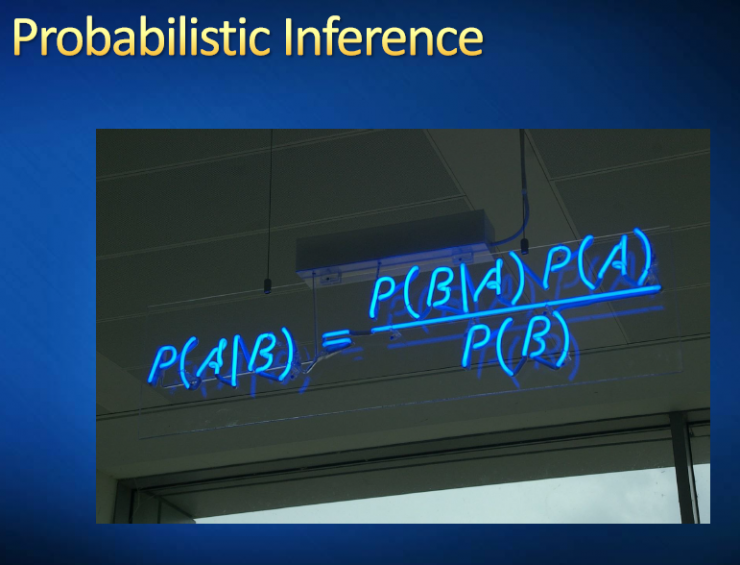

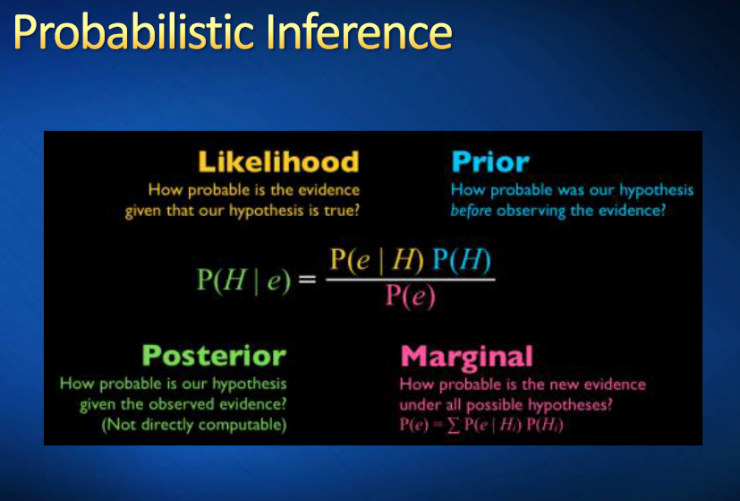

Bayes' theorem

Bayes' theorem is a theorem in probability theory relating the conditional and marginal probability distributions of random variables. Under some interpretations of probability, Bayes' theorem tells us how to update existing beliefs in light of new evidence.

Bayes' theorem states:

$$P(A\mid B) = \frac{P(B\mid A)\,P(A)}{P(B)}$$

where P(A|B) is the probability that A occurs given that B has occurred.

In Bayes' theorem, each term has a conventional name:

P(A|B) is the conditional probability of A given that B has occurred. It is also called the posterior probability of A, because it depends on the observed value of B.

P(B|A) is the conditional probability of B given that A has occurred. In Bayes' theorem it plays the role of the likelihood.

P(A) is the prior (or marginal) probability of A. It is called the "prior" because it does not take B into account.

P(B) is the prior or marginal probability of B; it acts as a normalizing constant.

Posterior probability = (likelihood × prior probability) / normalizing constant

That is, the posterior probability is proportional to the product of the prior probability and the likelihood.

In addition, the ratio P(B|A)/P(B) is sometimes called the standardized likelihood, so Bayes' theorem can also be written as:

Posterior probability = standardized likelihood × prior probability
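As a worked example of the formula, the sketch below computes the posterior of a hypothesis H after observing evidence E, with the normalizing constant P(E) obtained by the law of total probability. The numbers are hypothetical. Applying the update a second time, with the first posterior as the new prior, shows how probabilities are refined as more evidence arrives (assuming the two pieces of evidence are conditionally independent given H).

```python
def posterior(prior, p_e_given_h, p_e_given_not_h):
    """Bayes' theorem for a hypothesis H and evidence E: returns P(H | E)."""
    # Normalizing constant P(E), by the law of total probability.
    p_e = p_e_given_h * prior + p_e_given_not_h * (1 - prior)
    return p_e_given_h * prior / p_e


# Hypothetical numbers: H starts unlikely, and E is 18 times more likely under H.
p = 0.01
p = posterior(p, 0.9, 0.05)
print(round(p, 3))  # 0.154
# Seeing another, independent piece of the same kind of evidence refines it further.
p = posterior(p, 0.9, 0.05)
print(round(p, 3))  # 0.766
```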

Bayesian learning has been applied in many fields. For example, Bayesian learning is built into the "brains" of self-driving cars, so to some extent Bayes' theorem plays a major role in driving the car, or in helping the car learn how to drive.

Application of Bayesian learning: spam filters

However, the application of Bayesian learning that everyone is familiar with is the spam filter. The first spam filter was designed by David Heckerman and colleagues, and they used a very simple Bayesian learner, the naive Bayes classifier. Here is how it works. It starts from a hypothesis, that an email is spam or that it is not, before we have looked at the content of the email. The prior probability expresses how confident you are in that hypothesis beforehand; for example, you might believe a priori that an email is spam with probability 90%, 99%, or even 99.999%. The evidence for or against the hypothesis is the actual content of the email. For example, if the email contains the word "Viagra", it is much more likely to be spam; if it contains the word "FREE", it is also more likely to be spam; and if "FREE" is followed by four exclamation points, it is more likely still. If the name of your best friend appears in the email, the probability that it is spam goes down. The naive Bayes classifier combines all of this evidence. In the end it calculates the probability that the message is spam or not spam, and based on that probability it decides whether to filter the message out or deliver it to the user. Spam filters let us manage our mailboxes effectively.
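A minimal naive Bayes spam filter might look like the sketch below. It is an illustration under assumptions, not the filter Heckerman and colleagues built: the four training messages are made up, words are split on whitespace, and add-one (Laplace) smoothing keeps an unseen word from zeroing out a probability. Scores are summed in log space to avoid underflow and then converted back to P(spam | words).

```python
import math
from collections import Counter


def train(messages):
    """messages: list of (text, is_spam). Returns per-class word counts and message counts."""
    words = {True: Counter(), False: Counter()}
    docs = Counter()
    for text, is_spam in messages:
        docs[is_spam] += 1
        words[is_spam].update(text.lower().split())
    return words, docs


def spam_probability(text, words, docs):
    """P(spam | words in text) under the naive assumption that words are independent."""
    vocab = set(words[True]) | set(words[False])
    log_score = {}
    for label in (True, False):
        # Prior: the fraction of training messages with this label.
        score = math.log(docs[label] / sum(docs.values()))
        total = sum(words[label].values())
        for word in text.lower().split():
            # Each word is a piece of evidence; add-one smoothing handles unseen words.
            score += math.log((words[label][word] + 1) / (total + len(vocab)))
        log_score[label] = score
    # Normalize the two scores into a posterior probability.
    top = max(log_score.values())
    spam = math.exp(log_score[True] - top)
    ham = math.exp(log_score[False] - top)
    return spam / (spam + ham)


# Hypothetical training data.
training = [
    ("FREE viagra now", True),
    ("claim your FREE prize", True),
    ("lunch with alice tomorrow", False),
    ("meeting notes from alice", False),
]
words, docs = train(training)
print(spam_probability("free prize", words, docs))        # well above 0.5
print(spam_probability("lunch with alice", words, docs))  # well below 0.5
```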

Today many different algorithms are used in spam filters, but Bayesian learning was the first to be applied to spam filtering, and it is still used in many of them.

Analogizers

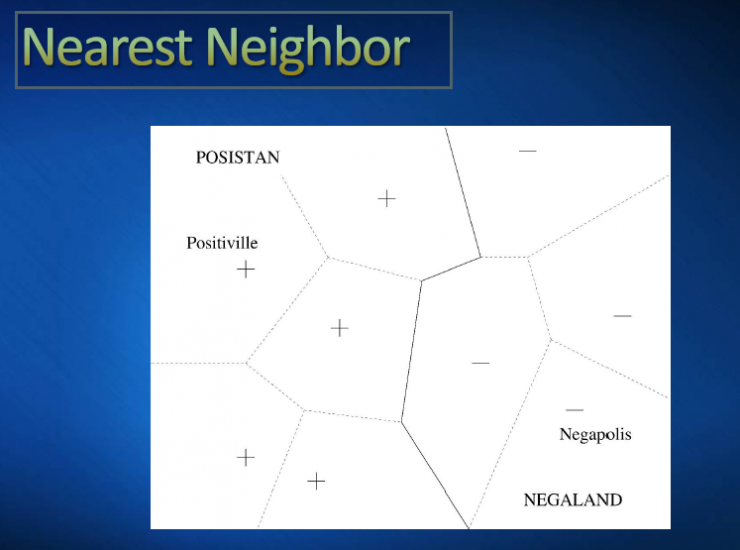

Finally, as I mentioned, the basic view of the analogizers is that everything we do and everything we learn is inferred by analogy. Analogical reasoning means noticing the similarity between a new situation, in which we need to make a decision, and situations we are already familiar with. One of the early pioneers of analogy-based learning was Peter Hart, who established important results about the nearest neighbor algorithm. Nearest neighbor was the first similarity-based algorithm; I will explain it in detail below. Vladimir Vapnik invented support vector machines, also called kernel machines, which became the most widely used and most successful similarity-based learners. These are the most basic forms of analogical reasoning. Others, such as Douglas Hofstadter, work on much more sophisticated forms of analogy. Douglas Hofstadter is a well-known cognitive scientist and computer scientist, and the author of "Gödel, Escher, Bach". In a 500-page book he argues that all intelligence is analogy, and he strongly advocates analogy as the master algorithm.

Nearest neighbor algorithm

Kernel machine

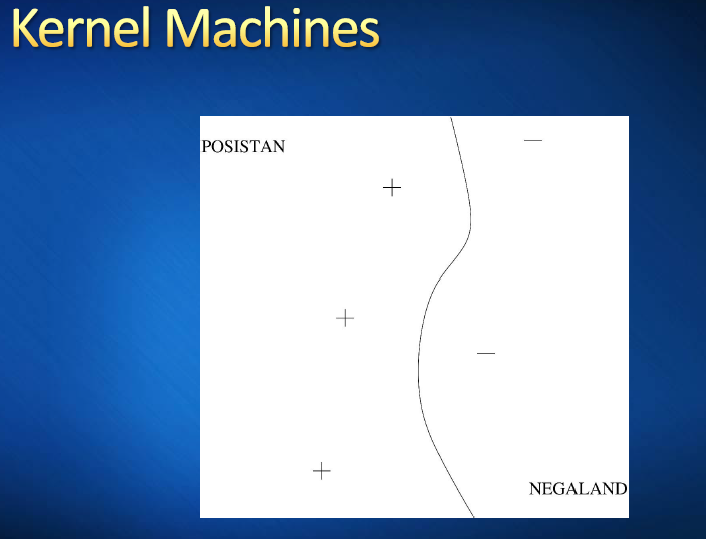

An example of analogical reasoning: nearest neighbor and kernel machines

Here is a puzzle that illustrates this view. Imagine two countries that stand for the positive and negative examples; I have given them the imaginative names "Posistan" and "Negaland." The figure does not show the border between the two countries, only the locations of their major cities. Posistan's major cities are marked with plus signs, with Positiveville as the capital, and Negaland's major cities are marked in the same way with minus signs. The question is: given the major cities, can you tell me where the border is? Of course, you cannot give a definitive answer, because the cities alone do not determine the border. But this is exactly the problem of machine learning: we have to generalize.

The nearest neighbor algorithm gives a simple answer to this question: if a point on the map is closer to some positive city than to any negative city, we assume the point is in Posistan. This divides the map into neighborhoods, one for each city, where a city's neighborhood consists of the points that are closer to it than to any other city. Posistan then becomes the union of the neighborhoods of the positive cities, and the result is a zigzag border. What is surprising about nearest neighbor is that, simple as it is, it does nothing at all during the learning phase: it just remembers the examples.

This approach has some shortcomings. First, the resulting border may not be the most accurate one, because the true border is probably smoother. Second, if you look closely at the map, you could throw away some of the cities without much effect on the result: a discarded city's neighborhood is simply absorbed by the neighborhoods around it, and the border does not change. The only cities that need to be kept are those that define the border, the so-called "support vectors". In general these vectors live in a high-dimensional space. Often a large number of cities can be discarded without any effect on the result, and in large data sets, being able to discard most of the data makes a big difference. Support vector machines, also known as kernel machines, solve these problems. Their learning procedure discards the examples that are not needed to define the border, keeps the ones that are, and produces a smooth border. When placing the border, a support vector machine maximizes the distance between the border and the nearest cities. That is how support vector machines work.
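The nearest neighbor rule for the Posistan/Negaland puzzle can be sketched as follows, assuming cities are points in the plane labeled "+" or "-"; the coordinates are hypothetical. The last lines check the observation above: a city deep inside Posistan can be discarded without changing any prediction on a grid of test points. A support vector machine would additionally choose a smooth, maximum-margin border; that part is not shown here.

```python
import math


def nearest_neighbor(cities, point):
    """Label a point with the label of the closest city (ties go to the first listed)."""
    _, label = min(cities, key=lambda city: math.dist(city[0], point))
    return label


# Hypothetical map: "+" cities are in Posistan, "-" cities are in Negaland.
cities = [((1, 1), "+"), ((1, 3), "+"), ((2, 2), "+"), ((0, 2), "+"),
          ((5, 1), "-"), ((5, 3), "-"), ((6, 2), "-")]
print(nearest_neighbor(cities, (2.5, 1.5)))  # +
print(nearest_neighbor(cities, (4.5, 2.5)))  # -

# (0, 2) lies deep inside Posistan, so dropping it leaves every prediction unchanged.
without_interior = [c for c in cities if c[0] != (0, 2)]
grid = [(x, y) for x in range(-2, 9) for y in range(-2, 6)]
print(all(nearest_neighbor(cities, p) == nearest_neighbor(without_interior, p)
          for p in grid))  # True
```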

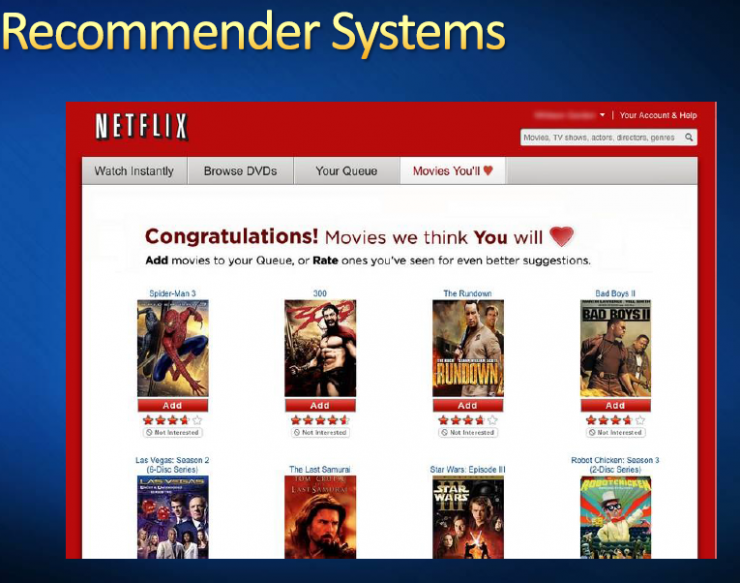

Recommender systems

Before deep learning, support vector machines were probably the most powerful learning algorithm. Analogy-based learning algorithms have been in use since the 1950s, and they have been applied to just about everything. We have all experienced an application of this kind of learning, even if we did not realize that it relied on analogy: recommender systems. Suppose I want to figure out which films to recommend to you. Recommending films by genre is a very simple approach that has been around for some 20 years, but I will not recommend by genre, because people's tastes are complex and changeable, and that would be a big problem. Instead I will use a method called "collaborative filtering": find, say, five people whose tastes are similar to yours, meaning that they gave five stars to the movies you gave five stars to and one star to the movies you gave one star to. If they gave five stars to a movie you have not seen yet, I can assume that you will like it too and recommend it to you. Collaborative filtering, which is a form of analogical reasoning, works extremely well. In fact, three-quarters of Netflix's business comes from its recommender system, and Amazon's recommender system accounts for about a third of its business. Since then, people have used many different learning algorithms to build recommender systems, but nearest neighbor was the first algorithm used for them, and it is still one of the best.
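User-based collaborative filtering with nearest neighbors can be sketched like this. The ratings, the similarity measure (average agreement on co-rated movies), and k = 2 neighbors are all hypothetical choices for illustration; production recommenders use far larger data and more refined similarity and weighting schemes.

```python
def similarity(a, b):
    """Agreement between two users' star ratings (1-5) on movies both have rated."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    # 1.0 when ratings match exactly, 0.0 when they differ by the maximum of 4 stars.
    return sum(1 - abs(a[m] - b[m]) / 4 for m in common) / len(common)


def recommend(ratings, user, k=2):
    """Rank unseen movies by the average rating given by the k most similar users."""
    me = ratings[user]
    others = [u for u in ratings if u != user]
    neighbors = sorted(others, key=lambda u: similarity(me, ratings[u]), reverse=True)[:k]
    pooled = {}
    for u in neighbors:
        for movie, stars in ratings[u].items():
            if movie not in me:
                pooled.setdefault(movie, []).append(stars)
    return sorted(((sum(s) / len(s), m) for m, s in pooled.items()), reverse=True)


# Hypothetical ratings; "you" have not seen movies D or E.
ratings = {
    "you": {"A": 5, "B": 1, "C": 5},
    "ann": {"A": 5, "B": 1, "C": 4, "D": 5},
    "bob": {"A": 4, "B": 2, "C": 5, "E": 2},
    "cat": {"A": 1, "B": 5, "C": 1, "E": 5},
}
print(recommend(ratings, "you"))  # [(5.0, 'D'), (2.0, 'E')]
```

Because "cat" rates movies the opposite way from "you", cat's five stars for E are ignored; the ranking is driven by the two most similar users, ann and bob.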

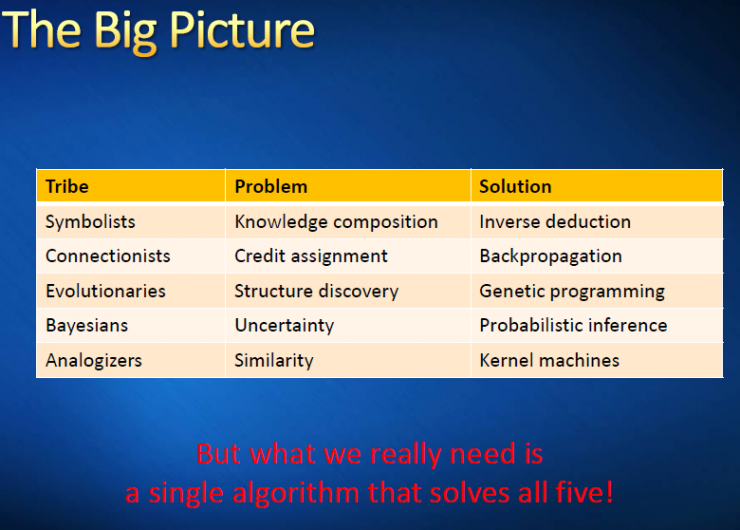

The five schools of machine learning: problems and solutions

To recap, we have talked about the five major schools of machine learning. Each school has a particular problem that it solves better than the others, and a master algorithm for solving it. For example, the problem that only the symbolists can solve is learning knowledge that can be composed in different ways; they learn it with inverse deduction. Connectionists use backpropagation to solve the credit assignment problem. Evolutionists solve the problem of learning structure: connectionists start with a fixed structure and only adjust the weights, whereas evolutionists know how to use genetic programming to come up with the structure itself. Bayesians are all about uncertainty. They know how to deal with it, and how to use data to update the probabilities of hypotheses. They use probabilistic inference, and they have efficient algorithms for applying Bayes' theorem to very large sets of hypotheses. Finally, the analogizers reason from the similarity between things, so they can generalize from just one or two examples; the best analogy-based learner so far is the kernel machine. However, I want to point out that each of these problems is real and important, and none of these algorithms solves all of them. What we really need is a single algorithm that solves all five problems at the same time; in other words, we need a unified theory of machine learning. In fact, we have made a lot of effort toward this goal and achieved some successes, but we still have a long way to go.

How to turn five algorithms into one

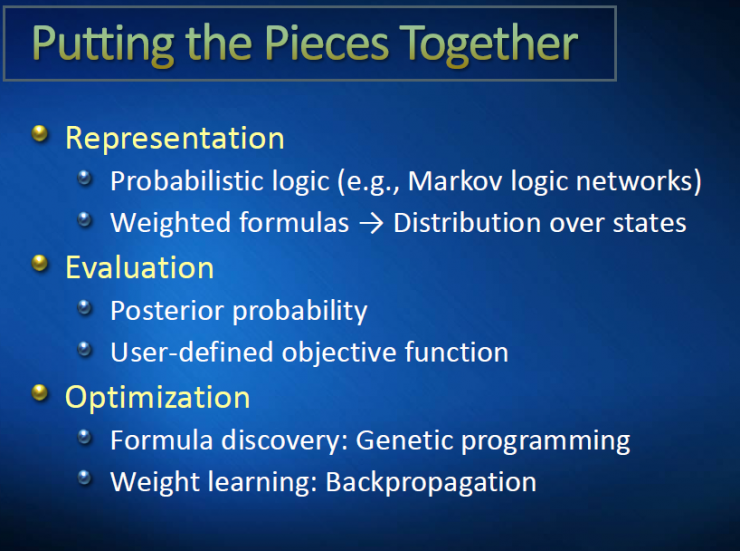

Below I will present the current state of our research. We have five algorithms, or five types of learning methods, and the key is how to unify them. This looks like a hard problem; some people even claim it is an unattainable goal. It seems hard because the five algorithms look so different. However, on closer inspection they are connected: each of them is made up of the same three components, namely representation, evaluation, and optimization.

Let us look at what each component means, in order to unify the five algorithms. Representation is the language in which the learner expresses the knowledge, model, or program it is learning. That language is not Java, C++, or anything similar, but a logical language. So our first task is to unify the representations. The most natural starting point is the symbolists' representation; here we use a variant of first-order logic. The representation used by Bayesians is graphical models. Both representations are extremely widely used, and if we can combine them, we can express just about anything: any computer program can be expressed in first-order logic, and any way of handling uncertainty or weighing evidence can be expressed with a graphical model. We have in fact achieved this combination, and have developed various forms of probabilistic logic. The most widely used is the Markov logic network, which combines first-order logic with Markov networks. It is a very simple model: you start with formulas in first-order logic and then assign each formula a weight.
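To illustrate attaching weights to first-order formulas, here is a brute-force sketch of how a Markov logic network assigns probabilities: each possible world gets probability proportional to exp(sum of the weights of the ground formulas it satisfies). The formula, the single constant Anna, and the weight 1.5 are hypothetical, and with only one constant each formula has a single grounding. Enumerating every world only works for tiny examples; real Markov logic systems use approximate inference.

```python
import itertools
import math


def implies(p, q):
    return (not p) or q


def world_probabilities(formulas, atoms):
    """P(world) proportional to exp(sum of weights of the formulas the world satisfies)."""
    worlds = [dict(zip(atoms, values))
              for values in itertools.product([False, True], repeat=len(atoms))]
    scores = [math.exp(sum(w for w, f in formulas if f(world))) for world in worlds]
    z = sum(scores)  # partition function (normalizing constant)
    return [(world, s / z) for world, s in zip(worlds, scores)]


# Hypothetical weighted formula over one person, Anna: Smokes(Anna) => Cancer(Anna).
atoms = ["Smokes(Anna)", "Cancer(Anna)"]
formulas = [(1.5, lambda w: implies(w["Smokes(Anna)"], w["Cancer(Anna)"]))]
probs = world_probabilities(formulas, atoms)
for world, p in probs:
    print(world, round(p, 3))
```

The world that violates the formula (Anna smokes but has no cancer) is less likely but not impossible; as the weight grows toward infinity, the formula behaves more and more like a hard logical rule.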

The second component of any learning algorithm is evaluation. The evaluation function is a scoring function that measures how good a candidate model is, for example how well it fits the data and whether it serves my purpose. In fact, every learning problem comes down to finding the program that maximizes the evaluation function. An obvious candidate evaluation function is the posterior probability used by Bayesians. In general, though, the evaluation should not be part of the algorithm: it should be supplied by the user, who decides what the learner should optimize.
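The separation between the learner and the evaluation function can be sketched as follows. The `learn` function is a hypothetical generic learner that simply returns the candidate with the highest score; the user supplies the evaluation, here the log posterior of a coin's bias given made-up data (7 heads in 10 flips). With a uniform prior the learner picks 0.7, but a user who strongly believes the coin is fair gets 0.5 from the same data.

```python
import math


def learn(candidates, evaluate):
    """Generic learner: return the candidate model with the highest evaluation score."""
    return max(candidates, key=evaluate)


def log_posterior(p, heads, tails, prior):
    """Unnormalized log posterior of a coin with P(heads) = p: log likelihood + log prior."""
    return heads * math.log(p) + tails * math.log(1 - p) + math.log(prior[p])


candidates = [0.3, 0.5, 0.7]
heads, tails = 7, 3  # hypothetical data: 7 heads in 10 flips

uniform = {p: 1 / 3 for p in candidates}
skeptical = {0.3: 0.05, 0.5: 0.9, 0.7: 0.05}  # this user strongly believes the coin is fair

print(learn(candidates, lambda p: log_posterior(p, heads, tails, uniform)))    # 0.7
print(learn(candidates, lambda p: log_posterior(p, heads, tails, skeptical)))  # 0.5
```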

The last component is optimization: finding the model that maximizes the evaluation function. Here there is a natural combination of genetic programming and backpropagation. We can use genetic programming to discover formulas: in first-order logic every formula is a tree, so we can apply the genetic process to these trees to evolve better formulas. A chain of reasoning, in turn, involves many different formulas, facts, and steps; each of them can be given a weight, and backpropagation can be used to learn those weights. We have done a lot of work on this, but it has not yet fully succeeded. Some people think that unifying the five algorithms into a single algorithm is only a matter of time. I am not so optimistic. Personally, I believe that even if we succeed in unifying the five paradigms, some major ideas will still be missing; there may be ideas we do not have yet, and without them we will not have a truly universal learner.

On the future influence of the master algorithm

I will end today's talk by briefly discussing the future impact of the master algorithm, using four examples. The first is home robots. We would all like a home robot that cooks, makes the beds, and so on. Why don't we have one yet? First, this goal cannot be reached without machine learning; there is no program today that can make a robot do everything it needs to do. Second, our existing learning algorithms are not yet good enough: in a single day's work a home robot will run into all five of these problems, so it needs to be able to solve all of them. Therefore, we still need to make more progress in developing the master algorithm.

The second is the Web brain. Everyone, including Google, is trying to turn the Web into a knowledge base. I want to ask questions and get answers, instead of typing keywords and getting back web pages. However, this requires that all the knowledge on the Web be represented in a form a computer can reason with, for example first-order logic. On the other hand, the Web is full of contradictions, noise, inconsistencies, and so on, so probabilities are needed to deal with them. Therefore, the five learning algorithms need to be unified before knowledge can be extracted from the Web.

The third is curing cancer. As far as human health is concerned, curing cancer may be the most important goal of all. So why haven't we found an effective cure yet? The problem is that cancer is not a single disease: every patient's cancer is different, and the same cancer mutates as the disease progresses. So no single drug is likely to cure all cancers. A real cure for cancer, as more and more cancer researchers believe, will come from a learning algorithm that takes into account the patient's genome, medical history, and the mutations in the tumor cells, and predicts which drug will kill the tumor cells without harming the patient's normal cells, or which sequence or combination of drugs will, or even designs a specific drug for that patient. In a way, this is like a recommender system for books or movies, except that it recommends a drug. Of course, the problem is much more complex than recommending a book or a movie: you need to understand how cells work and how genes and the proteins they produce interact. The good news is that we have a lot of data for this, such as microarrays, sequencing data, and so on. With our existing learning algorithms we have not been able to reach this goal, but a master algorithm would.

The fourth is the 360-degree recommender system. As a consumer, I would like a complete, 360-degree model of myself that learns from all the data I generate. Such a model would know me better than any of the small models that exist today, so it could give me much better recommendations: not just for small things, but for jobs, houses, and careers. Having such a recommender system would be like having a good friend who can give you valuable advice at every step of your life. To achieve this, we need not only ever-growing amounts of data but also powerful algorithms that can learn these rich models of people.

PS: This article was compiled by Lei Feng Network. Reprinting without permission is prohibited.

Via Pedro Domingos
