Abstract: This article provides an introduction to the multi-layer perceptron and backpropagation.

Artificial neural networks are computational models inspired by the biological neural networks that process information in the human brain. They have produced a series of breakthroughs in speech recognition, computer vision and text processing, generating excitement in both machine learning research and industry. In this blog post, we will attempt to understand a specific artificial neural network called the Multi-Layer Perceptron.

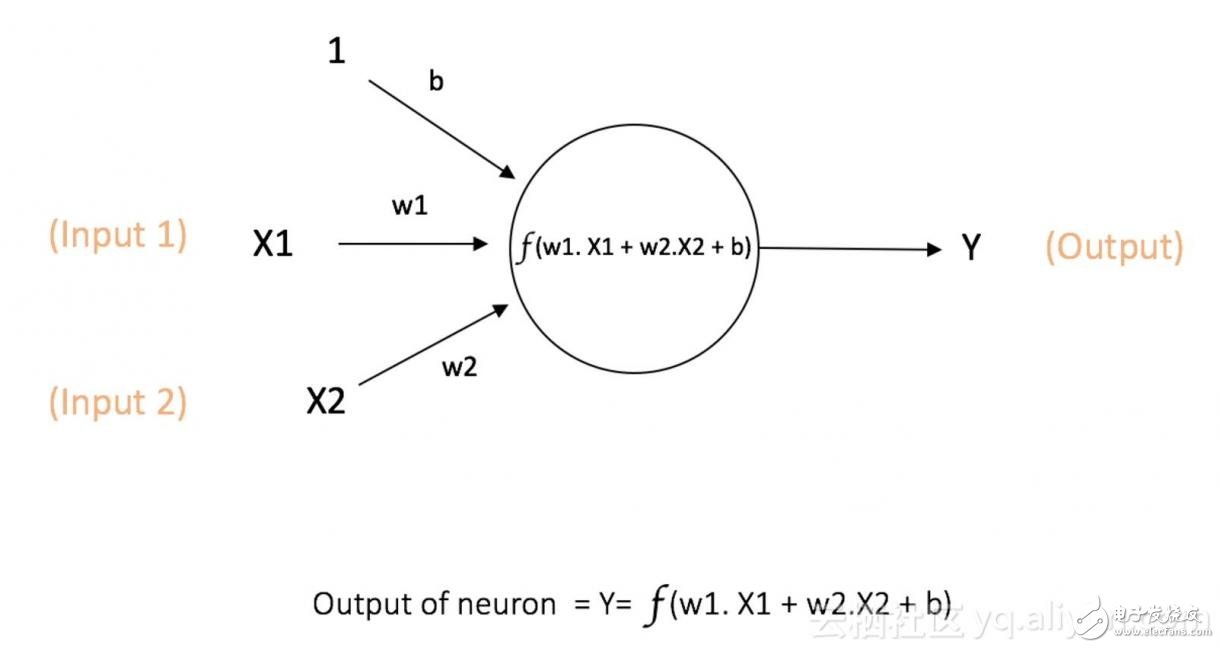

The basic unit of computation in a neural network is the neuron, commonly referred to as a "node" or "unit." A node receives input from other nodes or from an external source, and then computes an output. Each input has an associated "weight" (w), which is assigned according to that input's importance relative to the other inputs. The node applies a function f (defined below) to the weighted sum of its inputs, as shown in Figure 1:

Figure 1: Single neuron

This neuron accepts numerical inputs X1 and X2 with weights w1 and w2, respectively. In addition, there is an input 1 with weight b (called the "bias"). We will detail the role of the bias later.

The output Y of the neuron is calculated as shown in Figure 1, that is, Y = f(w1·X1 + w2·X2 + b). The function f is non-linear and is called the activation function. Its role is to introduce non-linearity into the output of the neuron. This is crucial because most real-world data is non-linear, and we want neurons to be able to learn these non-linear representations.
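The computation of a single neuron can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `neuron_output`, the example input and weight values, and the choice of the sigmoid as the activation f are all assumptions made for the example.

```python
import math

def sigmoid(z):
    # One possible activation function f (assumed here for illustration).
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(x1, x2, w1, w2, b, f=sigmoid):
    # Weighted sum of the inputs, plus the bias b applied to the constant input 1.
    z = w1 * x1 + w2 * x2 + b * 1.0
    # The activation function turns the weighted sum into the output Y.
    return f(z)

# Hypothetical inputs and weights, chosen only to show the call.
y = neuron_output(x1=0.5, x2=-1.0, w1=0.8, w2=0.2, b=0.1)
print(y)
```

With the sigmoid as f, the output always lies strictly between 0 and 1, whatever the weights are.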

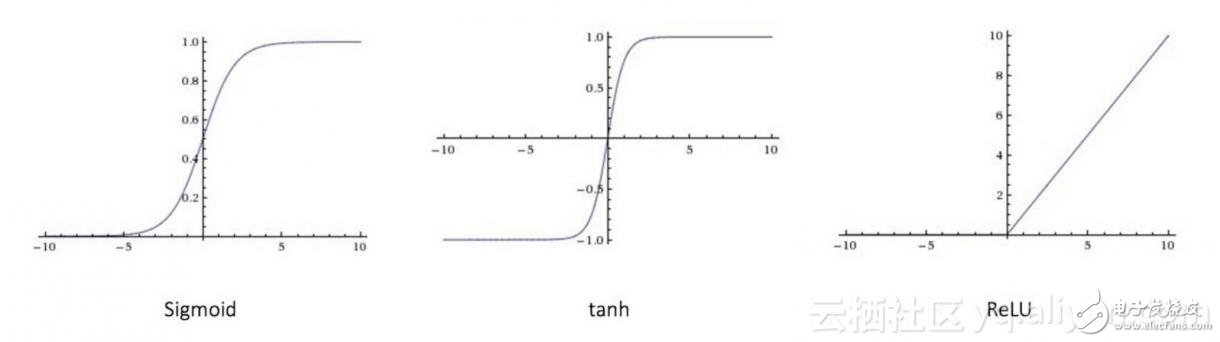

Each (non-linear) activation function receives a number and performs a specific, fixed mathematical calculation [2]. In practice, you may encounter several activation functions:

Sigmoid: takes a real-valued input and squashes it to a value between 0 and 1: σ(x) = 1 / (1 + exp(−x))

Tanh (hyperbolic tangent function): takes a real-valued input and squashes it to the range [-1, 1]: tanh(x) = 2σ(2x) − 1

ReLU: ReLU stands for Rectified Linear Unit. It takes a real-valued input and thresholds it at zero (negative values are replaced with zero): f(x) = max(0, x)

The following figure [2] shows the activation functions above:

Figure 2: Different activation functions.
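The three activation functions above translate almost directly into code. The sketch below uses only the Python standard library and defines `tanh` through the sigmoid identity given above instead of calling `math.tanh`; the function names and sample inputs are illustrative choices.

```python
import math

def sigmoid(x):
    # σ(x) = 1 / (1 + exp(−x)), output between 0 and 1
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    # tanh(x) = 2σ(2x) − 1, output in the range [-1, 1]
    return 2.0 * sigmoid(2.0 * x) - 1.0

def relu(x):
    # f(x) = max(0, x), negative values become zero
    return max(0.0, x)

# Evaluate each function at a few sample points.
for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), tanh(x), relu(x))
```

Comparing `tanh(x)` with `math.tanh(x)` for a few values is a quick way to check that the identity holds numerically.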

Importance of Bias: The primary function of the bias is to provide each node with a trainable constant value (in addition to the normal input received by the node). The role of bias in neurons is detailed in this link:

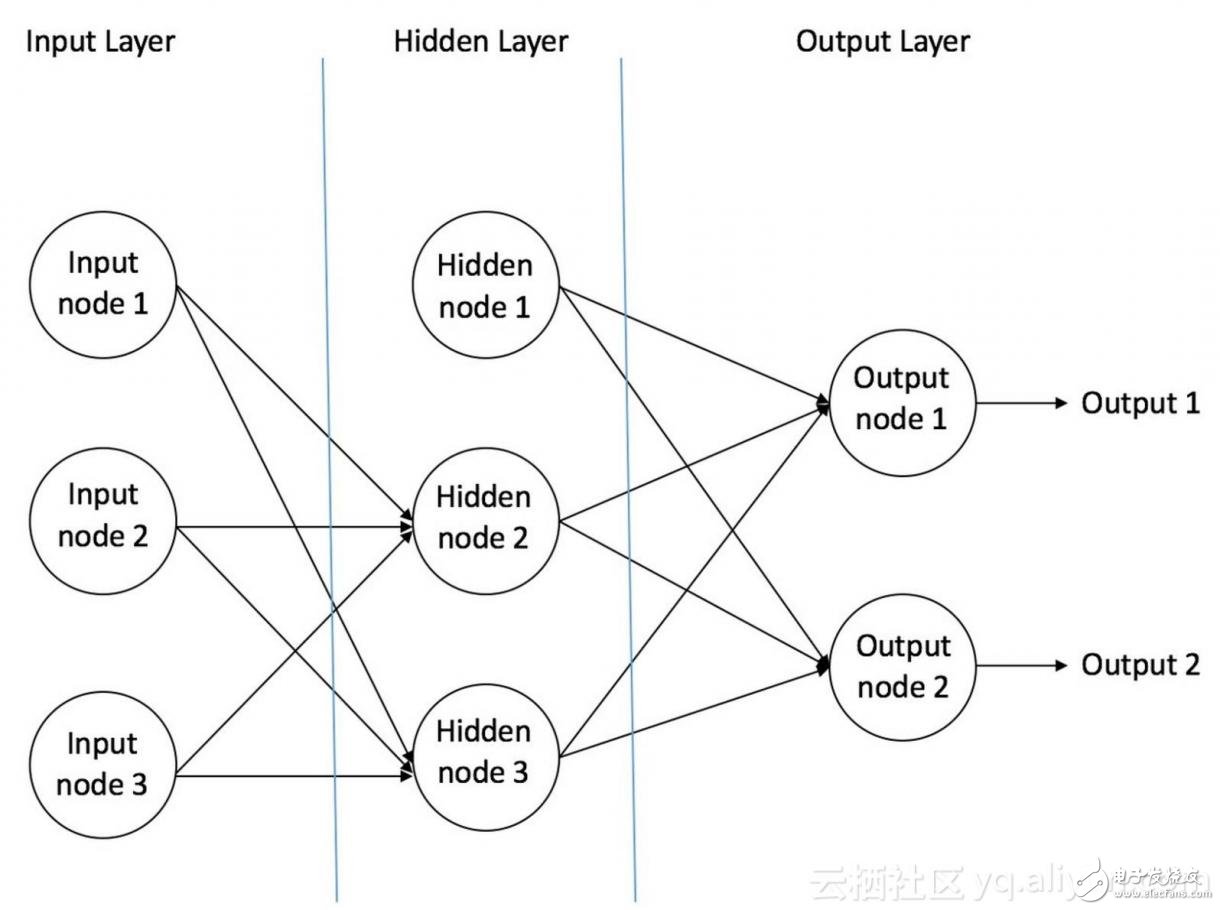

Feedforward neural network: The feedforward neural network was the first and simplest type of artificial neural network devised [3]. It contains multiple neurons (nodes) arranged in layers. Nodes in adjacent layers are joined by connections, or edges, and every connection has a weight.

Figure 3 is an example of a feedforward neural network.

Figure 3: An example of a feedforward neural network

A feedforward neural network can contain three types of nodes:

1. Input Nodes: Input nodes bring information from the outside world into the network and are collectively referred to as the "input layer." No computation is performed in an input node; it only passes information on to the hidden nodes.

2. Hidden Nodes: Hidden nodes have no direct connection to the outside world (hence the name). They perform computations and pass information from the input nodes to the output nodes. Hidden nodes are collectively referred to as a "hidden layer." While a feedforward neural network has exactly one input layer and one output layer, it can have zero or more hidden layers.

3. Output Nodes: The output nodes are collectively referred to as the "output layer" and are responsible for computing and passing information from the network to the outside world.

In a feedforward network, information moves in only one direction: forward from the input layer, through the hidden layers (if any), to the output layer. There are no cycles or loops in the network [3] (this property distinguishes the feedforward neural network from the recurrent neural network, in which the connections between nodes form a cycle).
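The one-directional flow described above can be sketched as a loop over layers, where each layer's outputs become the next layer's inputs. This is a minimal sketch under stated assumptions: the helper names `layer_forward` and `feedforward`, the list-of-lists weight layout, the sigmoid activation, and all weight values are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    # Activation assumed for every non-input node in this sketch.
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases, f=sigmoid):
    # weights[j][i] is the weight on the connection from input i to node j.
    return [
        f(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def feedforward(inputs, layers):
    # Input nodes do no computation; they just hand their values forward.
    activations = inputs
    # Information moves strictly forward: there is no loop back to earlier layers.
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 input nodes, 2 hidden nodes, 1 output node.
hidden = ([[0.5, -0.3], [0.1, 0.8]], [0.0, -0.1])
output = ([[1.0, -1.0]], [0.2])
y = feedforward([1.0, 2.0], [hidden, output])
print(y)
```

Passing a `layers` list with only an output layer gives a network with no hidden layer, and adding more `(weights, biases)` pairs gives a deeper one.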

The following are two examples of feedforward neural networks:

1. Single layer perceptron - This is the simplest feedforward neural network and does not contain any hidden layers. You can learn more about single-layer perceptrons in [4] [5] [6] [7].

2. Multilayer Perceptron - A multilayer perceptron has at least one hidden layer. We will only discuss multilayer perceptrons below, as they are more useful than single-layer perceptrons in today's practical applications.
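A classic illustration of why a hidden layer helps is the XOR function, which a single-layer perceptron cannot represent because its two classes are not linearly separable. The sketch below wires up a tiny multilayer perceptron by hand. The weights are picked manually rather than learned, and the step activation is an assumption made for readability, so this shows what such a network can represent, not how it is trained.

```python
def step(z):
    # Simple threshold activation (assumed here; not one of the functions above).
    return 1 if z > 0 else 0

def xor_mlp(x1, x2):
    # Hidden node 1 fires when at least one input is 1 (logical OR).
    h_or = step(1.0 * x1 + 1.0 * x2 - 0.5)
    # Hidden node 2 fires only when both inputs are 1 (logical AND).
    h_and = step(1.0 * x1 + 1.0 * x2 - 1.5)
    # Output node fires when OR is true but AND is not, which is XOR.
    return step(1.0 * h_or - 1.0 * h_and - 0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_mlp(a, b))
```

The two hidden nodes each draw one linear boundary, and the output node combines them, which is something no single weighted sum of x1 and x2 can do on its own.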
